Posted on October 6, 2019


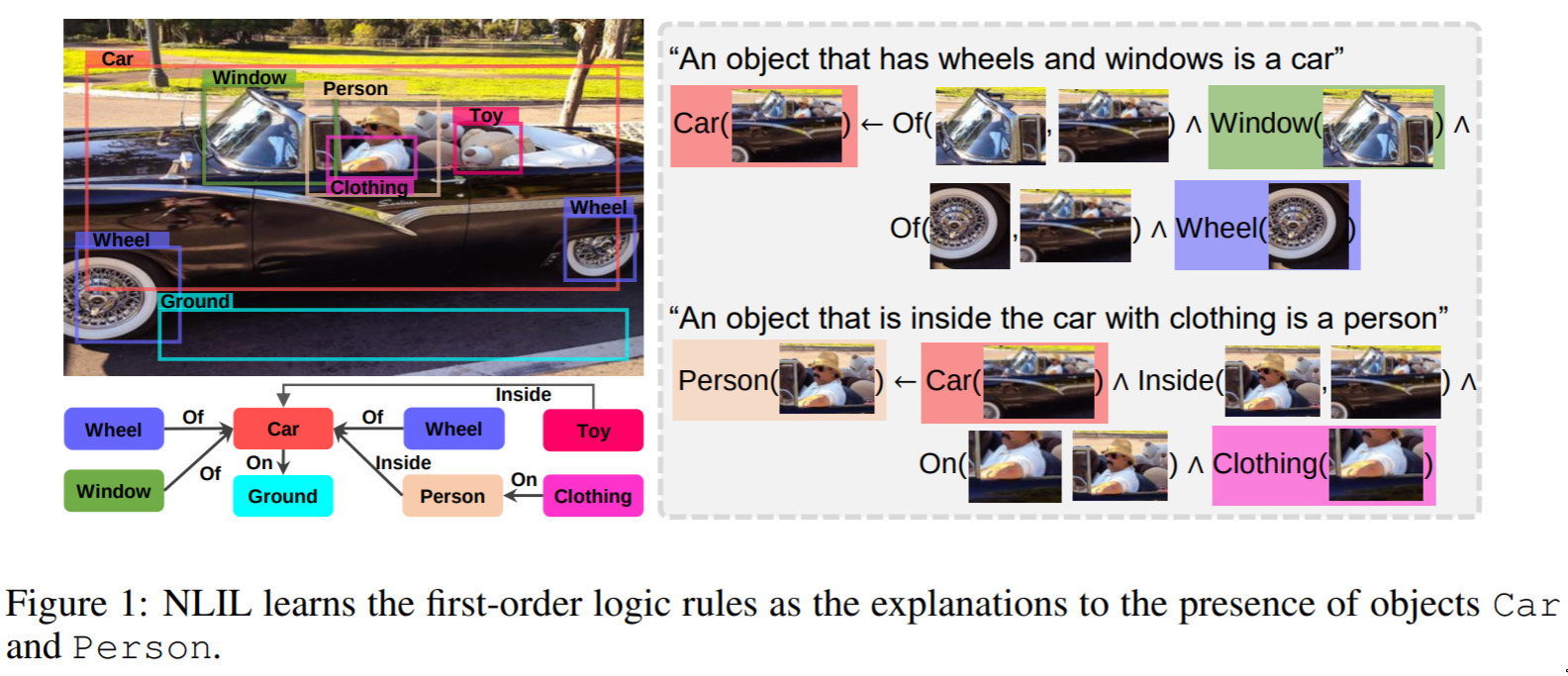

Learn to explain efficiently via neural logic inductive learning

For explainability, this is a fascinating and important step. Consider: how do you know that something is a car? What makes a person a person? This paper attempts to address the problem with a kind of “constructivist” approach, in which a concept is explained by the parts and relations that make it up: a car is a car because it has wheels.
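To make the idea concrete, here is a minimal sketch of what a first-order rule looks like when it is used as an explanation. It is not the paper's method: the paper learns such rules with a neural model, whereas here the rule `car(X) <- of(Y, X) & wheel(Y)` is written by hand. The toy scene graph, the predicates `wheel` and `of`, and the function `explain_car` are all hypothetical.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical toy scene graph: unary facts map a predicate to the
# entities it holds for; binary facts are (predicate, subject, object) triples.
UNARY: Dict[str, Set[str]] = {
    "wheel": {"w1", "w2"},
    "window": {"win1"},
}
BINARY: Set[Tuple[str, str, str]] = {
    ("of", "w1", "obj_a"),
    ("of", "w2", "obj_a"),
    ("of", "win1", "obj_b"),
}


def explain_car(x: str) -> List[str]:
    """Evaluate the hand-written rule car(X) <- of(Y, X) & wheel(Y).

    Returns one human-readable justification per binding of Y that
    satisfies the rule body; an empty list means the rule does not fire.
    """
    reasons = []
    # Sort the triples so the explanations come out in a stable order.
    for pred, y, obj in sorted(BINARY):
        if pred == "of" and obj == x and y in UNARY["wheel"]:
            reasons.append(f"car({x}) because wheel({y}) and of({y}, {x})")
    return reasons


if __name__ == "__main__":
    for entity in ["obj_a", "obj_b"]:
        reasons = explain_car(entity)
        verdict = "car" if reasons else "not explained as a car"
        print(entity, "->", verdict)
        for r in reasons:
            print("   ", r)
```

The point of the sketch is that the output is not just a label: each positive answer comes with the specific facts (which wheel, attached to which object) that made the rule fire, which is the kind of explanation the constructivist view asks for.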

I think this kind of logical reasoning will become increasingly important as models make richer decisions and as we seek solid explanations from our models.