Learning to understand videos for answering questions

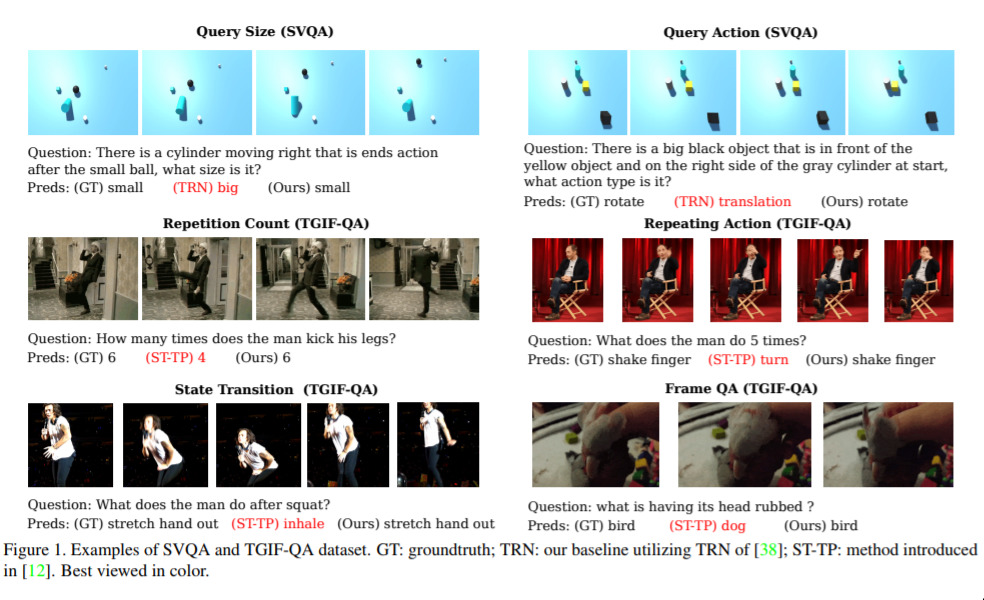

Videos are becoming increasingly prevalent on the internet, so it makes sense that researchers are spending time trying to understand them. One particular area of research is so-called “visual question answering”. The goal is to train a network to watch a video and then answer questions (via text) about what happened in it. Some examples are shown in the image above.

This work brings to this area an idea that we’re seeing frequently on the showreel: build up a rich representation first, then use that representation to further refine answers. Conceptually, this is a bit similar to the “Scene Graph” work, for example.

It’s also neat that the researchers are from Deakin!