Quantum Gradient Algorithm for General Polynomials

The idea here is that, in principle, if your function has a lot of parameters, then the time to compute the full gradient with respect to all of them scales with the number of parameters. This is bad when you have billions of parameters, so in optimisation we mostly fall back on stochastic gradient descent, i.e. working from a small random sample of the data at each step rather than doing the full computation.
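To make the scaling concrete, here is a minimal classical sketch. It assumes the function is only available as a black box (no automatic differentiation) and estimates the gradient by forward differences. The names `finite_difference_gradient` and `example_polynomial` are hypothetical and chosen only for illustration. The point is the call count: one evaluation at the base point plus one per parameter.

```python
def finite_difference_gradient(f, x, h=1e-6):
    """Forward-difference gradient estimate of a black-box function f at x.

    Returns (gradient, number_of_calls_to_f).
    """
    calls = 0

    def counted(point):
        # Wrap f so that every evaluation is counted.
        nonlocal calls
        calls += 1
        return f(point)

    base = counted(x)
    grad = []
    for i in range(len(x)):
        shifted = list(x)
        shifted[i] += h  # perturb one parameter at a time
        grad.append((counted(shifted) - base) / h)
    return grad, calls


def example_polynomial(x):
    # Hypothetical test function: sum_i x_i^2 + x_0 * x_1
    return sum(v * v for v in x) + x[0] * x[1]


grad, calls = finite_difference_gradient(example_polynomial, [1.0, 2.0, 3.0])
print(grad)   # approximately [4.0, 5.0, 6.0]
print(calls)  # 4, i.e. len(x) + 1
```

With d parameters this approach always needs d + 1 evaluations of the function, which is the linear scaling that becomes painful at billions of parameters.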

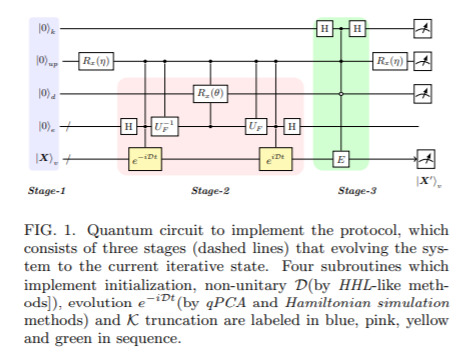

Here, the idea is that we could use quantum computers to do the full computation significantly faster. In this work, an algorithm is introduced that in fact achieves this for general polynomials (perhaps a step towards achieving it for full neural networks).