TensorFlow.js - How to easily deploy deep learning models

In the following we’ll suppose you’ve trained a model in Python, and are now considering how to “productionise” the inference. The point of the article will be to convince you that it’s interesting to try and use TensorFlow.js.

You’ve got a model, how to deploy it?

Once we’ve built a deep learning model, the next thing we naturally want to do is deploy it to the people who want to use it.

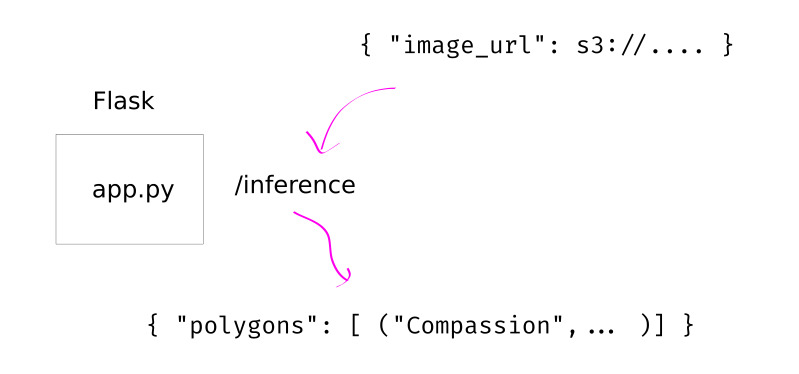

We can typically make this as simple or as elaborate as we like. At the simple end, we might build a Flask API endpoint in Python, say /inference, that takes in a JSON blob, pushes it into our model, and sends back an inference. At the elaborate end, we might use Amazon, Azure, or Google servers, or any of the myriad other services that provide deep learning inference and hosted models.

But there are a few problems with this approach:

- You need a significant dev-ops capability to manage just the operating-system and data-management capabilities,

- You need GPU resources (virtual or otherwise) to allocate for the inference,

- Even with the GPU capability, you need to manage versioning of your models and how to swap them out,

- And you need to know how to ration and allocate the GPU resources between all your inference servers.

The point is, there’s much to do. In my experience, this is in fact a significant barrier, and expense, in deploying ML in production today.

Now, it may turn out that in your world you need to do this kind of deployment. But what I would like to think about briefly is another approach …

Have you considered … JavaScript?

So, what if I told you that you don’t need to set up any computers, and that in fact the computers you use will be entirely managed and set up by other people!? Great! What’s the catch? Well, you need to use JavaScript …

The setup is that you want to do some inference on a “small” amount of user-supplied data: an image, a passage of text, even a video, or a piece of audio, but perhaps not 5GB of satellite images.

Then, the plan is like so:

- You train your model as you typically would (in TensorFlow, let’s say),

- You export the weights,

- You build the “inference” part of your model in TensorFlow.js,

- The model runs in the browser,

- That’s it!

The main idea is, just as we can build our entire model in TensorFlow, we can build the so-called “forward” part of our model in TensorFlow.js; that is, just those parts that are needed for inference, and not the parts that are necessary for training or for supporting training. Then, we simply rebuild that part of our model using the TensorFlow.js standard library (such as tanh layers, or whatever our particular network requires).
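
To make the “forward part only” idea concrete, here is a minimal sketch in plain JavaScript, deliberately without any library, of a tiny two-layer network with a tanh hidden layer. The shapes, the weight values, and the names `dense`, `softmax`, `forward` and `exampleParams` are all made up for illustration; in a real project you would more likely reach for the layers that TensorFlow.js provides rather than writing the arithmetic by hand.

```javascript
// Forward-only sketch of a tiny two-layer network in plain JavaScript.
// All shapes and weight values below are made up for illustration.

// Multiply a vector x (length n) by a matrix W (n rows, m columns)
// and add a bias vector b (length m).
function dense(x, W, b) {
  return b.map((bias, j) =>
    x.reduce((sum, xi, i) => sum + xi * W[i][j], bias)
  );
}

// Numerically stable softmax over a vector of logits.
function softmax(logits) {
  const max = Math.max(...logits);
  const exps = logits.map((v) => Math.exp(v - max));
  const total = exps.reduce((a, b) => a + b, 0);
  return exps.map((v) => v / total);
}

// The "forward" part only: no loss, no gradients, no optimiser.
function forward(x, params) {
  const hidden = dense(x, params.W1, params.b1).map((v) => Math.tanh(v));
  return softmax(dense(hidden, params.W2, params.b2));
}

// Hypothetical weights: 3 inputs -> 2 hidden units -> 2 classes.
const exampleParams = {
  W1: [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]],
  b1: [0.0, 0.1],
  W2: [[1.0, -1.0], [-0.5, 0.5]],
  b2: [0.0, 0.0],
};

const probabilities = forward([1.0, 2.0, 3.0], exampleParams);
console.log(probabilities); // two class probabilities that sum to 1
```

Everything that exists only to support training (the loss, the optimiser, dropout, and so on) simply has no counterpart here, which is why the inference side tends to be much smaller than the training code.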

Then, if we’ve stayed in the TensorFlow ecosystem, it’s quite easy: we can load in our weights more or less immediately. Even if we haven’t, we can ultimately always map our exported weights into our network.
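
As a rough sketch of what “mapping exported weights into our network” can look like when you are outside the TensorFlow ecosystem, suppose the training side writes out one flat array of floats plus a small manifest listing each parameter’s name and shape. That format, and the names `unpackWeights`, `toRows` and `exampleManifest`, are assumptions for this example only; your own export will have its own layout.

```javascript
// Map a flat buffer of exported weights onto named parameters.
// The manifest format here is hypothetical, chosen for illustration.
function unpackWeights(flat, manifest) {
  const unpacked = {};
  let offset = 0;
  for (const { name, shape } of manifest) {
    const size = shape.reduce((a, b) => a * b, 1);
    if (offset + size > flat.length) {
      throw new Error(`Not enough values for "${name}"`);
    }
    const values = Array.from(flat.subarray(offset, offset + size));
    // 2-D parameters become arrays of rows; 1-D parameters stay flat.
    unpacked[name] = shape.length === 2 ? toRows(values, shape[1]) : values;
    offset += size;
  }
  if (offset !== flat.length) {
    throw new Error(`Manifest covers ${offset} values but got ${flat.length}`);
  }
  return unpacked;
}

// Split a row-major flat list into rows of the given width.
function toRows(values, width) {
  const rows = [];
  for (let i = 0; i < values.length; i += width) {
    rows.push(values.slice(i, i + width));
  }
  return rows;
}

// Hypothetical export: a 3x2 weight matrix followed by a bias of length 2.
const exampleManifest = [
  { name: "W1", shape: [3, 2] },
  { name: "b1", shape: [2] },
];
const exampleFlat = new Float32Array([1, 2, 3, 4, 5, 6, 0.5, -0.5]);
const exampleWeights = unpackWeights(exampleFlat, exampleManifest);
console.log(exampleWeights.W1); // the 3x2 matrix as three rows
console.log(exampleWeights.b1); // the bias vector
```

The length checks are the useful part: if the Python and JavaScript sides disagree about a shape, it is much better to fail loudly at load time than to get silently wrong predictions.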

Once that’s done, you’re basically done. You only need to work out how to get your data into the browser to do inference on. Typically, if you’re working with images or video, this is easy. You can use the webcam, if you want a live stream, and really the world is your oyster in all the typical ways that it is on the web.
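
For images, the data you get from a canvas in the browser is a flat array of RGBA bytes, and the model usually wants floats. Here is a small sketch of that conversion. Scaling to the range 0 to 1 and dropping the alpha channel are assumptions (your model may expect a different normalisation), and `rgbaToNormalisedRgb` and `fakePixels` are names invented for the example. TensorFlow.js also has `tf.browser.fromPixels` for reading pixels directly into a tensor, which is probably what you would use in practice.

```javascript
// Convert RGBA bytes (the layout used by canvas ImageData.data) into
// RGB floats in [0, 1], dropping the alpha channel.
// The [0, 1] scaling is an assumption; match whatever training used.
function rgbaToNormalisedRgb(rgba) {
  if (rgba.length % 4 !== 0) {
    throw new Error("Expected 4 bytes (R, G, B, A) per pixel");
  }
  const pixelCount = rgba.length / 4;
  const rgb = new Float32Array(pixelCount * 3);
  for (let p = 0; p < pixelCount; p++) {
    rgb[p * 3] = rgba[p * 4] / 255; // red
    rgb[p * 3 + 1] = rgba[p * 4 + 1] / 255; // green
    rgb[p * 3 + 2] = rgba[p * 4 + 2] / 255; // blue
  }
  return rgb;
}

// Stand-in for a 2x1 image: one red pixel and one mid-grey pixel.
const fakePixels = new Uint8ClampedArray([255, 0, 0, 255, 128, 128, 128, 255]);
const modelInput = rgbaToNormalisedRgb(fakePixels);
console.log(Array.from(modelInput));
```

In the browser, `fakePixels` would be replaced by the `data` field of the `ImageData` you get back from a canvas context’s `getImageData` call, for example after drawing a webcam frame onto the canvas.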

The upsides and downsides

The upsides are:

- The model doesn’t run on your hardware ⇒ fewer resources required to deploy. In other words, if you want to handle 10,000 requests per minute, then you need significant GPU compute if you host the model on the server; but in this approach, you don’t need any additional compute; it all happens on the user’s computer.

- You can utilise the exact same deployment and versioning techniques (with large static content; namely the weights) that you use for standard websites, which are tried and tested.

- If the model is fast enough, it can allow for very powerful “live” interaction by users.

- It’s incredibly portable: because it runs in the browser, it can run on phones, small laptops, and laptops with GPUs (and utilise those GPUs), and it can be run entirely offline.

The downsides are:

- You need to maintain consistency between your Python models and your JavaScript models.

- Because the model doesn’t run on hardware you control, the speed of inference can vary wildly.

- In order to deal with the speed issue, we typically run a “smaller” or “compressed” model in the browser, which can be less accurate.
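
One way to cope with the varying speed, sketched below under some assumptions, is to time a few inference runs on the user’s device and fall back to a smaller model if the larger one is too slow. The names `medianInferenceMs`, `chooseVariant` and the “full” and “compressed” variants are hypothetical, and the 33 ms budget (roughly 30 frames per second) is only an example. The clock is passed in as a parameter so that the demo at the bottom can use a fake one and behave the same on every machine.

```javascript
// Use the high-resolution timer where available, else fall back to Date.now.
const defaultNow = () =>
  typeof performance !== "undefined" ? performance.now() : Date.now();

// Time a synchronous inference function several times and return the
// median duration in milliseconds (upper middle for even run counts).
function medianInferenceMs(runInference, runs = 5, now = defaultNow) {
  const timings = [];
  for (let i = 0; i < runs; i++) {
    const start = now();
    runInference();
    timings.push(now() - start);
  }
  timings.sort((a, b) => a - b);
  return timings[Math.floor(timings.length / 2)];
}

// Pick the first variant whose measured time fits the budget.
// `variants` is ordered from most to least accurate; if none fits,
// the last (smallest) one is returned.
function chooseVariant(variants, budgetMs, now = defaultNow) {
  for (const variant of variants) {
    if (medianInferenceMs(variant.run, 5, now) <= budgetMs) {
      return variant.name;
    }
  }
  return variants[variants.length - 1].name;
}

// Demo with a fake clock: the "full" model pretends to take 80 ms per
// run and the "compressed" one 20 ms. These numbers are invented.
let fakeTime = 0;
const fakeNow = () => fakeTime;
const exampleVariants = [
  { name: "full", run: () => { fakeTime += 80; } },
  { name: "compressed", run: () => { fakeTime += 20; } },
];
const chosenVariant = chooseVariant(exampleVariants, 33, fakeNow);
console.log(chosenVariant); // "compressed"
```

Note that this sketch times synchronous functions. With TensorFlow.js running on the GPU, results may be computed asynchronously, so a real measurement would need to wait for the output values to be available before stopping the clock.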

Final thoughts

Browser-based deep learning won’t be for everyone, but my feeling is that if you’re out in the world applying deep learning, you can probably find a way to run some kind of model in the browser.

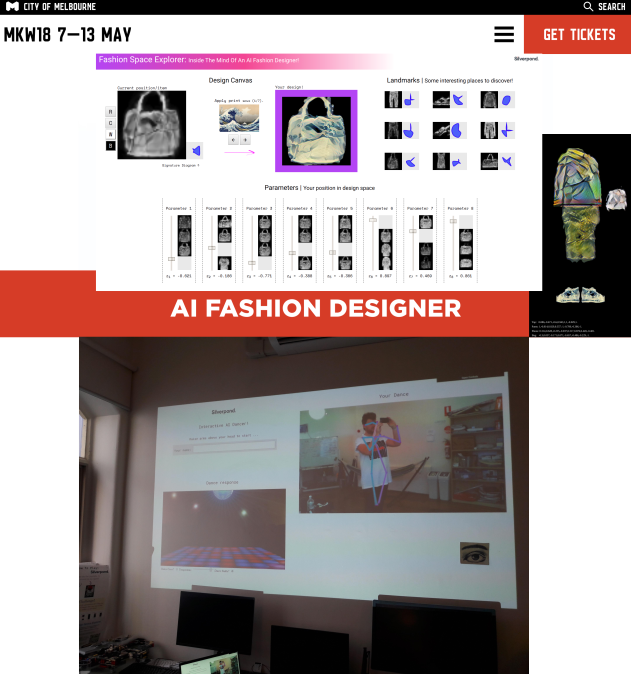

Furthermore, the kinds of interactions you can get with users by having a “real-time” inference model are wildly different from those of a slow web-request model, or one that takes an unknown amount of time if queues are involved. Notably, this kind of real-time interaction allowed me, previously, to build an interactive fashion design booth, where the audience could engage with a GAN, and also an interactive dance booth, where people could go to dance with an AI.

We were able to put together both of these projects much faster, and much more portably, because we were using JavaScript-based deep learning inference.

So I encourage you to take a look at TensorFlow.js, and try and build something with it!